At work, a community group sent my department a directory to local businesses. It was in a spreadheet and it was a mess.

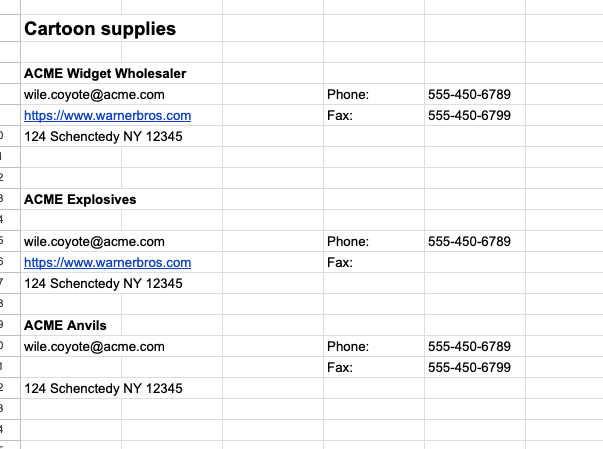

It looked something like this:

There was 5000 lines of this mess. There was some consistency, but not enough to make this and easy fix.

The first thing I did was download the google sheet as an HTML file. I combed through it to find some consistent

class names to iterate over and collect the data. I turned to Beautiful Soup to loop through the doc and pick out pieces of it to write to a CSV file. That worked ok, but things like email and Phone were on the same line. So they shared the same class name.

I fiddled with the HTML doc for a while but I realized I needed another option. I asked some people in the Python

slack channel. Someone suggested I use openpyxl. I'd never used it

before and since I was more familiar with Beautiful Soup I went there first. I started reading the docs for openpyxl

and threw together a few lines of practice code. It seemed to work pretty well. I was able to pick out rows and columns

to get data from. From there I could write in some logic to pick out the business names. In openpyxl, you can get

font data. worksheet[46][0].font.b This bit of code means you get the first cell of the 46th row of the specified

work sheet. The font.b property is to check for bold text. If True Then I could do another little loop from there.

So my code would go down the rows. When it hit a cell with bold text that was less than 14px it would run a short loop.

Every entry in the spreadsheet covered only 5 rows with 4 columns in each row. So when I reached a bold business

name, the code woud do a short loop of 5 rows. I would iterate through each columnt of the 5 rows. If the cell had a

value, I would pull that data and add it to a list. I ended up with a list about 7 or 8 elements long. Some of them

were a None value. But that was OK. I was able to get all the correct data related to the business name.

From there I wrote the list into a CSV file. It wasn't perfect, I had some columns mismatched. If a business didn't have

a fax then the line would sometimes have the physical address. The next step required some manual work. But it was easy

compared after I was able to automate the collection of all the data. In the final spreadsheet I would filter a

column by Fax: and a certain number of rows would rise to the top that were empty. In the next column was something

like the Pysical address or the URL. All I had to do was cut large blocks of data from the mismatche column and move it

to the right column. It took a little work but I was high on the success of scraping all the data, a little manual work

didn't matter. Ultimately my boss was happy with the list. However I found out later, the creator of the messy

spreadsheet sent and updated version with more organization. Oh well. next time I'll work faster.